BootSeer - LLM Training Startup Optimization

System-level optimization framework for reducing startup overhead in large-scale LLM training

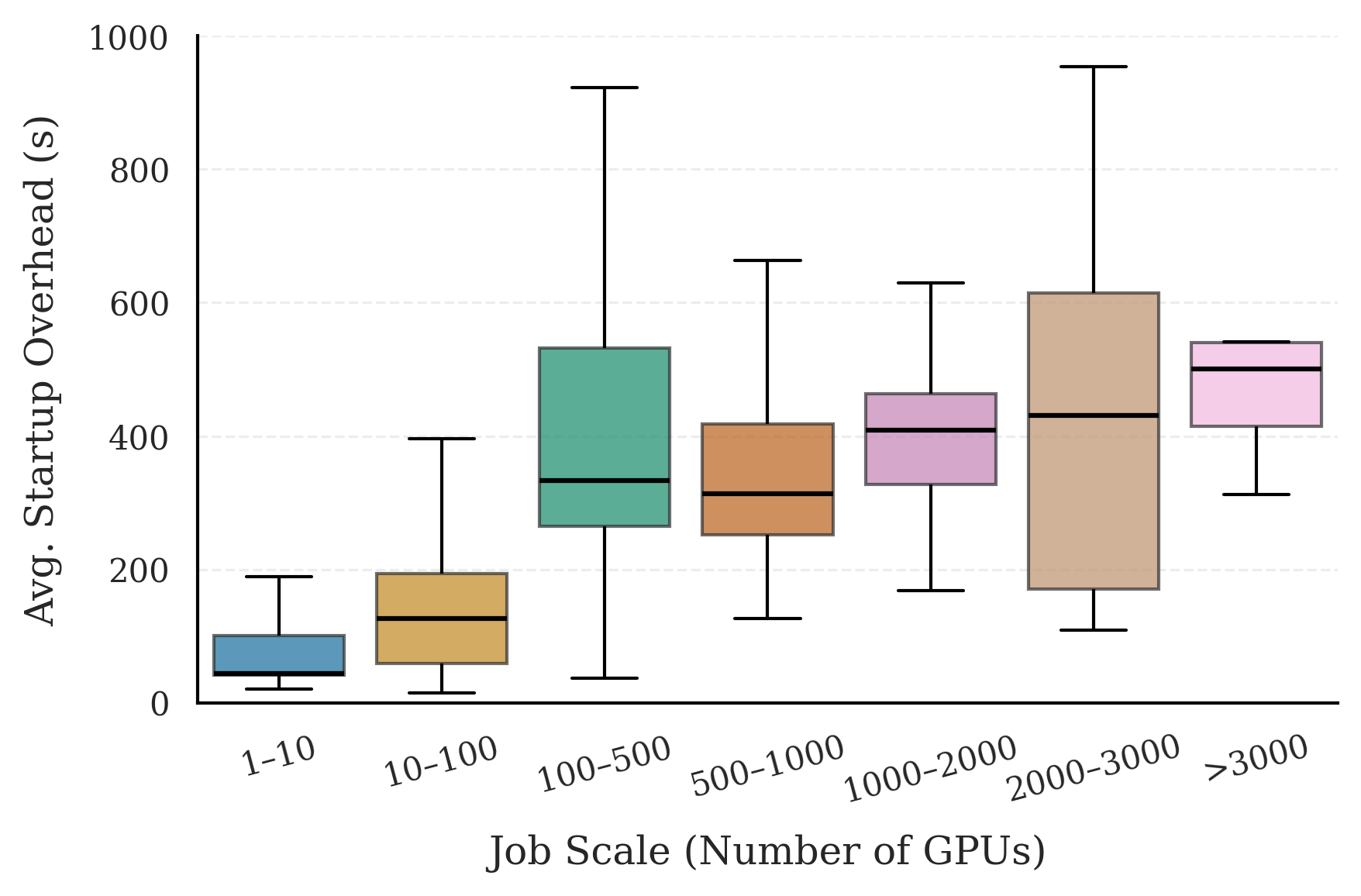

BootSeer is a comprehensive system-level optimization framework designed to address the critical issue of startup overhead in large-scale language model training. This research focuses on the delay before training jobs begin execution, which can waste significant GPU time in production environments.

Research Objectives

The primary goal is to minimize startup overhead in LLM training by:

- Startup Analysis: Characterizing the components and costs of training startup

- Bottleneck Identification: Understanding the three primary startup bottlenecks

- System Optimization: Developing techniques to reduce startup delays

- Production Deployment: Implementing solutions in real-world training clusters

Technical Approach

BootSeer addresses three key startup bottlenecks:

1. Container Image Loading

- Hot Block Record-and-Prefetch: Optimizing container image loading through intelligent prefetching

- Block-level Optimization: Reducing I/O overhead during container initialization

2. Runtime Dependency Installation

- Dependency Snapshotting: Creating pre-configured dependency environments

- Shared Dependencies: Leveraging common dependencies across training jobs

3. Model Checkpoint Resumption

- Striped HDFS-FUSE: Optimizing checkpoint loading through parallel I/O

- Checkpoint Caching: Intelligent caching of frequently accessed model states

Key Outcomes

This research has achieved:

- 50% reduction in startup overhead in production environments

- Publication at top-tier venues (arXiv:2507.12619)

- Real-world deployment in production training clusters

- Significant cost savings in GPU utilization

Impact

BootSeer’s contributions are crucial for:

- Cost Reduction: Minimizing wasted GPU time during startup

- Efficiency Improvement: Faster iteration cycles for ML teams

- Resource Optimization: Better utilization of expensive training infrastructure

- Production Reliability: More stable and predictable training workflows

Related Publications

- BootSeer: Analyzing and Mitigating Initialization Bottlenecks in Large-Scale LLM Training (arXiv:2507.12619)

Figure: The startup overhead of LLM training, previously considered negligible, now has significant impact on production training jobs. This visualization demonstrates the critical importance of addressing startup bottlenecks in large-scale language model training environments.